🌐 Can AI Save AI Infrastructure? Cutting Energy, Water, and Wear in AI Data Centers

Last November, I toured an AI data center in Las Vegas with Schneider Electric. Row after row of server racks. Loud, climate-controlled rooms. More security guards than operations staff.

What struck me most: you can't actually see what's happening.

The servers hum. Lights blink. But the real action happens behind walls and under raised floors: the complex choreography of chillers, cooling towers, CRAC units, valves, and fans that keeps everything running. It is invisible to everyone except the engineers tasked with optimizing it.

And we're about to build a lot more of it.

JPMorgan projects $5 trillion in AI infrastructure buildout by 2030. Everyone's talking about how much power these data centers will consume. Almost no one is discussing how much carbon will be emitted by manufacturing all that hardware in the first place.

Scope 3 emissions—the embodied carbon from producing servers, semiconductors, racks, cooling systems, and power equipment—have an enormous impact. So much so that Microsoft abandoned its 2030 climate targets in 2024 because of the embodied carbon associated with data center construction and hardware manufacturing (Note: Last year, Microsoft said it remains pragmatically optimistic).

"I read the Microsoft Sustainability Report, and it was the first report where they admitted, hey, we can't commit to the 2030 climate neutral goals anymore because of our data centers," said Jasper de Vries, CEO of Lucend. "But it was not because of the CO2 emissions from running the data centers. It was all due to their Scope 3 emissions. All of the hardware that goes into the data centers."

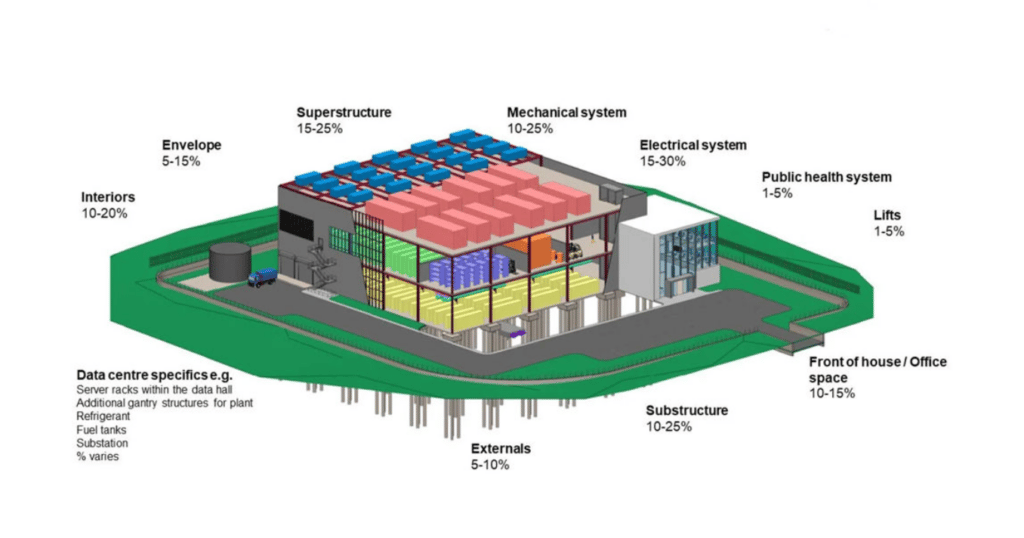

Approx. breakdown of embodied carbon in data center construction. Mechanical and Electrical systems make up 25-55%. Source: Procore

This week, I spoke with Jasper about a different approach to data center efficiency—one that addresses both operational energy and the hardware replacement cycle at once.

The Hardware Efficiency Ceiling

For decades, data centers pursued efficiency through better equipment. More efficient chillers. Advanced cooling systems. Smarter power supplies. That playbook works for a time.

Physical limits constrain how efficient individual components can become. Plus, hardware innovation cycles take years to move through design, testing, manufacturing, and deployment, while AI data centers are being built right now.

Meanwhile, data center complexity is increasing. Modern AI factories operate at scales that dwarf traditional data centers. More components. More sensors. More interdependencies.

That complexity creates the opportunity.

The Butterfly Effect

Seven years ago, Jasper was consulting for a major European data center. His background was in data and analytics, not mechanical engineering. He'd used AI across healthcare, insurance, food chains, and marketing. But data centers fascinated him for two reasons.

First: how fundamental yet invisible they are. Nobody knew what data centers were or how they enable everything from Zoom calls to Netflix streams.

Second: the data itself. Unlike healthcare or marketing, data center sensor data can't be used against people. No privacy concerns. Just machine data revealing optimization opportunities.

Then he had the flash of insight that would lead to Lucend.

"I remember the very first time when we just got started, and we had all of this sensor data," Jasper said. "My background is in decision support systems and complexity theory, where the butterfly flaps its wings in Japan causes a hurricane in South America. The data center is a complex system in itself."

"In that first data center, we looked at the data and I remember—something happened at the roof, something happened at the chiller, and we could see the system respond in the rooms all the way down to the valves of certain assets."

Something changes on the roof. It cascades through 300 billion sensor readings down to valves in server rooms.

No human operator can track those relationships. The system is too complex. Too many variables are changing simultaneously.

But software can.

From Symptoms to System Optimization

Lucend ingests sensor data from mechanical and electrical infrastructure—fan speeds, valve openings, floor pressures, power consumption, temperature set points—and uses machine learning to do three things:

1. Identify symptoms across the data center infrastructure

2. Combine those symptoms into diagnostics

3. Generate specific operator recommendations

One facility manager cut power usage effectiveness (PUE) from 1.6 to 1.36 in a single year, a 40% reduction in overhead energy (the portion of PUE above 1.0), saving $4.3 million.
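The arithmetic behind that PUE figure is worth a quick check. PUE is total facility energy divided by IT energy, so 1.0 is the floor and everything above it is overhead such as cooling and power conversion. The sketch below is illustrative only (the function names are made up for this example and are not Lucend's). It shows that moving from 1.6 to 1.36 removes 40% of the overhead, which works out to roughly 15% of total facility energy if the IT load stays constant.

```python
def overhead_per_unit_it(pue: float) -> float:
    """Non-IT energy (cooling, power conversion, etc.) per unit of IT energy."""
    return pue - 1.0


def pue_change(before: float, after: float) -> tuple[float, float]:
    """Return (fractional cut in overhead energy, fractional cut in total energy).

    Assumes the IT load is the same before and after, so total facility
    energy is proportional to PUE.
    """
    overhead_cut = (overhead_per_unit_it(before) - overhead_per_unit_it(after)) / overhead_per_unit_it(before)
    total_cut = (before - after) / before
    return overhead_cut, total_cut


overhead_cut, total_cut = pue_change(1.6, 1.36)
print(f"Overhead energy cut: {overhead_cut:.0%}")
print(f"Total facility energy cut at constant IT load: {total_cut:.0%}")
```

Both numbers describe the same improvement. Which one to quote depends on whether the comparison is against the overhead alone or against the whole facility's energy use.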

Still, the deeper value shows up in hardware life extension.

Take CRAC units, the computer room air conditioning units that blow cold air under raised floors toward servers. Lucend's models simulate how average fan speed changes when units are turned on or off.

The counterintuitive finding: turning on more units often saves energy. When more units are active, each fan spins more slowly.

Slower fan speeds mean two things: lower operational energy and reduced wear and tear.
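One way to see why this can happen is the fan affinity laws, a standard engineering approximation: airflow scales roughly linearly with fan speed, while fan power scales roughly with the cube of speed. The sketch below is an idealized illustration under that assumption, not Lucend's model. The airflow and power ratings are hypothetical, and it ignores minimum fan speeds, fixed per-unit losses, and how air actually distributes under the floor.

```python
def total_fan_power_kw(active_units: int, required_airflow: float,
                       rated_airflow: float, rated_power_kw: float) -> float:
    """Total fan power when identical units share a fixed airflow requirement.

    Idealized affinity-law model: each fan's airflow is proportional to its
    speed, and its power is proportional to speed cubed.
    """
    # Fraction of full speed each unit needs to deliver its share of airflow.
    speed_fraction = required_airflow / (active_units * rated_airflow)
    if speed_fraction > 1.0:
        raise ValueError("not enough active units to deliver the required airflow")
    return active_units * rated_power_kw * speed_fraction ** 3


# Hypothetical room: needs 40,000 m3/h of airflow; each unit is rated
# for 12,000 m3/h at 5 kW of fan power at full speed.
for units in (4, 6, 8):
    power = total_fan_power_kw(units, 40_000, 12_000, 5.0)
    print(f"{units} units on: {power:.1f} kW total fan power")
```

In this simplified model, total fan power falls with the square of the number of active units, so doubling the units cuts fan power to a quarter. Real facilities will see smaller gains, which is why the effect has to be simulated per site rather than assumed.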

That's where operational energy meets Scope 3 emissions—extending hardware life delays replacement decisions worth millions of dollars per facility per year, while also avoiding embodied carbon emissions from manufacturing new equipment.

Product-Market Fit

Lucend doesn’t have to knock on doors. Data centers come to them.

The pitch is simple: hand over your existing sensor data. Within six weeks, you'll receive recommendations to improve performance, reliability, power consumption, water use, and the CO2 footprint.

Digital Realty, which operates over 300 data centers, uses Lucend to help achieve carbon neutrality by 2030. So does T5. Lucend’s platform is now deployed across 50+ data centers globally, in cities including Melbourne, Singapore, Frankfurt, Paris, Dublin, and Chicago.

Every new data center makes the system smarter. River cooling in one location. Exotic chiller configurations in another. Different climate zones. Different designs.

"We have a suitcase filled with optimization models," Jasper said. "Whenever you come up to us and ask, hey, how can you help us? If you have a chiller, we already have a whole bunch of optimization models that optimize your chillers from all of these different angles."

The network effect compounds. Each deployment adds data. Better data improves the models. Better models attract more customers.

And it scales at software speed. Vegas today, Chicago tomorrow.

The Business Model Writes Itself

What drives Jasper and his team—saving megawatt hours of energy—connects directly to customer goals.

"Every megawatt hour that we save has a positive benefit for our customers," Jasper said. "We are fortunate enough to be working with a type of solution where we can link societal benefits or sustainability benefits directly to monetary benefits, but also reliability and risk decrease for our customers."

The sustainability metric is the business metric. No trade-offs.

Lucend charges a fee based on the data center's IT capacity. The more energy they save, the more value customers see. The longer they extend hardware life, the more replacement costs customers avoid.

Supercool Takeaway

The $5 trillion AI buildout will create massive embodied carbon from manufacturing hardware. Software can unlock system complexity to address both operational energy and hardware replacement at the speed this moment demands.

Operator Takeaways

System optimization beats component efficiency. Physical hardware has limits. Software managing system complexity doesn't, and it scales at the speed the market demands.

Extend hardware life, not just reduce power. Solutions that address both operational energy and asset wear tackle operational and embodied carbon simultaneously.

Network effects compound in infrastructure. Each new deployment across different climates and designs makes the product more valuable to everyone.

This Week’s Podcast Episode

Can AI Save AI Infrastructure? Cutting Energy, Water, and Wear in AI Data Centers

🎙️ Listen on Apple, Spotify, YouTube, and all other platforms.

↓

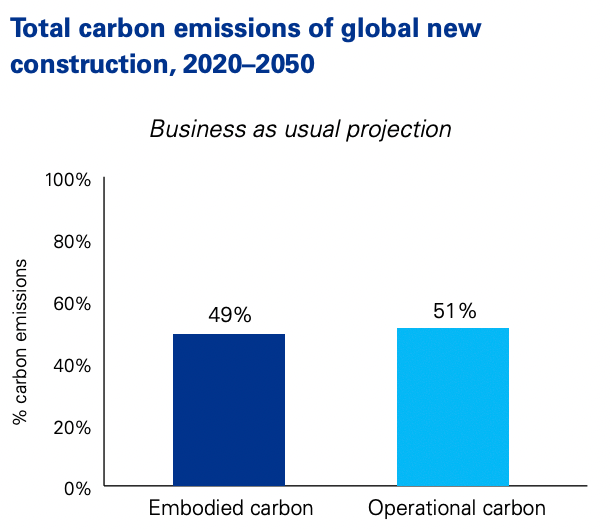

Stat of the Week: 49%

Between 2020 and 2050, nearly half of all carbon emissions from new construction will come from embodied carbon—the emissions released making, shipping, and installing building materials—not from operating the buildings themselves.

Source: KPMG

Quote of the Week:

One of our operators said, ‘Wow, I'm gonna train all my new operators on your tool because they now understand how this facility actually works.’

↓

3 Ways Data Center Owners Are Cutting Carbon

Data center operators are taking a comprehensive approach to cut emissions. Here are three operational strategies gaining traction.

1. Capturing Waste Heat

Data centers generate massive amounts of heat that typically gets vented into the air. Some operators now pipe that heat into district heating systems for nearby buildings or redirect it to industrial processes. The approach cuts operational waste while delivering useful thermal energy where it's needed.

Companies to watch:

Equinix - Operational projects heating homes in Canada and Paris, including the Olympic Aquatics Centre

Amazon - Ireland facility saved 1,100 tonnes of CO2 in its first year heating local buildings

Stockholm Data Parks - Swedish initiative warming homes across the city

2. Deploying Liquid Cooling

Air cooling hits physical limits as server power density increases. Liquid cooling systems absorb heat directly from chips, dramatically reducing energy consumption while keeping equipment cooler. Cooler hardware lasts longer, delaying expensive replacements.

Companies to watch:

Microsoft - Azure AI clusters running on liquid cooling infrastructure

Digital Realty - Paris and Singapore facilities operational with liquid cooling for AI workloads

Iceotope - Immersion cooling deployments across hyperscale and edge data centers

3. Using Modular Construction

Factory-built data center modules reduce construction waste and embodied carbon compared to traditional building methods. Standardized designs enable deployment in months instead of years, with lower material use and more predictable costs.

Companies to watch:

Schneider Electric - Factory-tested modular systems deployed globally across three continents

BladeRoom Data Centres - UK-based pioneer in rapid-deployment modular facilities

Vertiv - Delivering 100kW modular units operational in 25 weeks

↓

PODCASTS

The Kind Leader: Leading with Optimism — Josh Dorfman on Making Climate Solutions Human, Scalable & Enduring

The Curious Capitalist: Josh Dorfman (Supercool)
